Using PDSA to adapt Pocket QI training for virtual delivery

In this collection you will find three stories on how we used a PDSA (Plan Do Study Act) approach to test and learn how to adapt our Pocket QI training course, normally delivered face-to-face in groups of up to 40, into a virtual course online.

Quality Improvement Department ‘tests’ virtual Pocket QI training

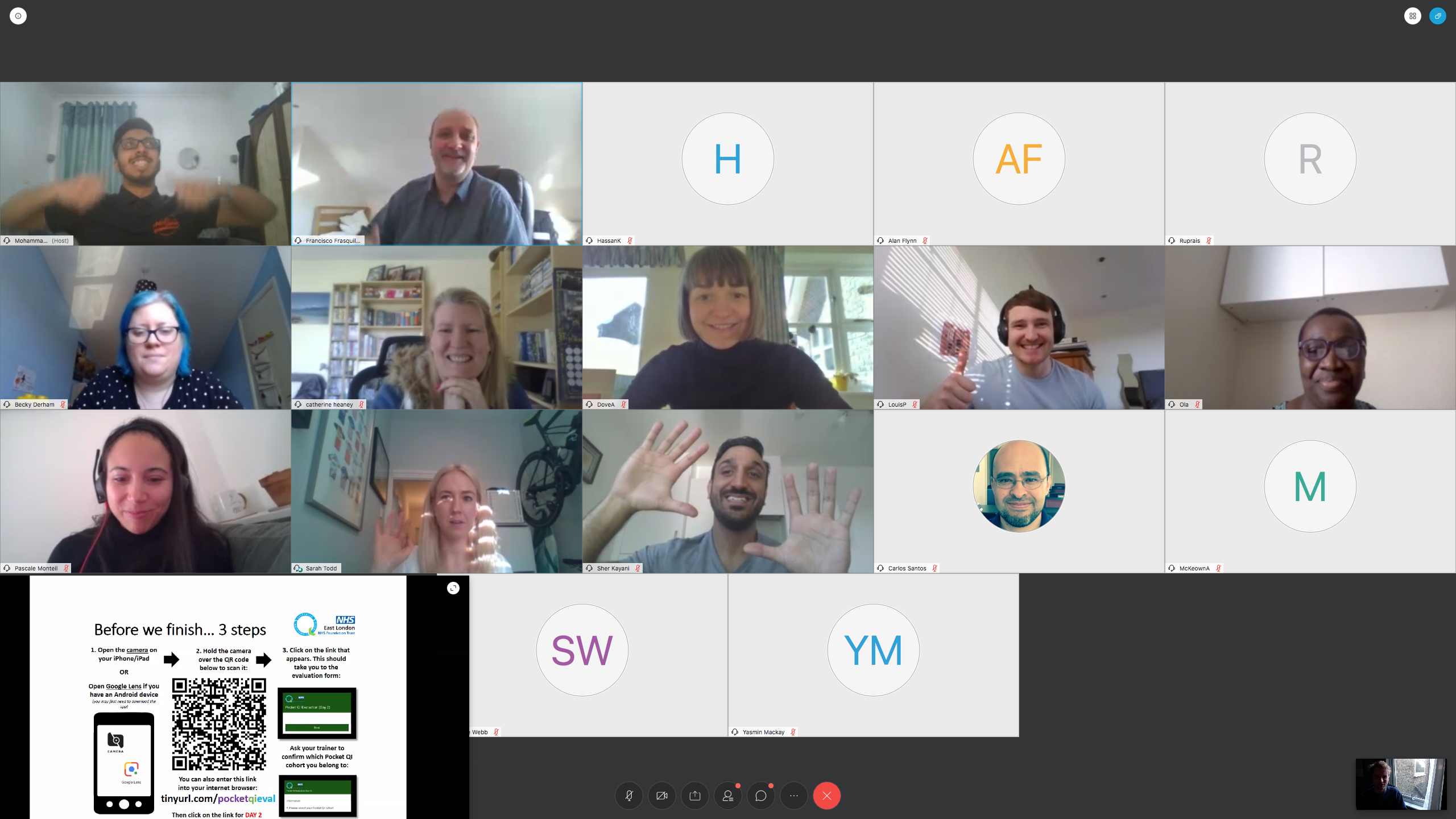

During March we were all presented with many different challenges. One such challenge for the QI Department was to explore how to continue building skills for QI within ELFT during such a difficult time. As with other areas in the Trust, we had to adapt rapidly to the current situation. So, on 25th March the QI Department hosted Module 1 of ‘Pocket QI’ (cohort 30) virtually for the first time, for 22 people. Our team recognised that, to achieve a positive and interactive experience for delegates, we would need to adapt our content and delivery for a virtual event, using the technology available and learning how to do this very quickly.

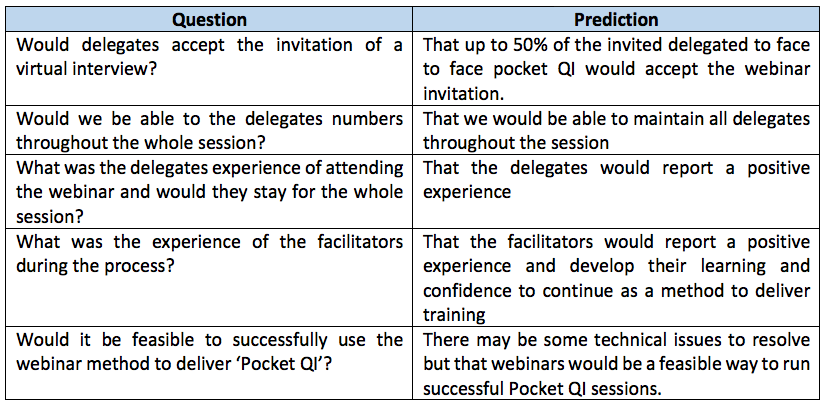

Our theory was that we would be able to successfully conduct a virtual webinar teaching session to an unlimited number of people.

In our test we asked a series of questions and made predictions.

How did we do this?

We used the Plan, Do, Study, Act (PDSA) approach, which informed our learning.

Plan: Plan the test, including the plan for collecting data

We wanted to deliver the training virtually as a webinar, and tested the method for one session over a period of 4 hours.

We predicted that delegates would accept the invitation and that the method would be well received. In addition, we predicted that the facilitators would find it an effective way to deliver the training.

- Who: Up to 30 delegates, three improvement advisors and two technical team members.

- What: Inform, prepare and invite the delegates; conduct the webinar using WebEx as the virtual platform.

- When: 25th March for 4 hours

- Data: Number of delegates accepting invitation; feedback from both delegates and facilitators; review of technical issues.

Do: Run the test on a small scale

What happened?

- Both team members (IAs) scheduled to facilitate were unable to attend, so two other IAs stepped in to take over

- 22 out of 30 delegates accepted the invitation to attend the webinar

- The webinar started as planned, with a 30-minute sign-up time prior to the start

- Facilitators asked for interaction from delegates throughout the session

- Some technical difficulties were experienced but resolved in real time

- The session lasted 2 hrs 45 mins, inclusive of 3 x 10-minute breaks

- Delegates were asked to rate their experience by answering “Would they recommend virtual Pocket QI?”

Study: Analyse the results and compare to the predictions

- Webinar conducted on time and successfully

- Minimal issues with technology, which were resolved quickly

- 22 delegates (>50%) started the session, with 19 completing it (fig. 1)

- Feedback from delegates (fig. 2)

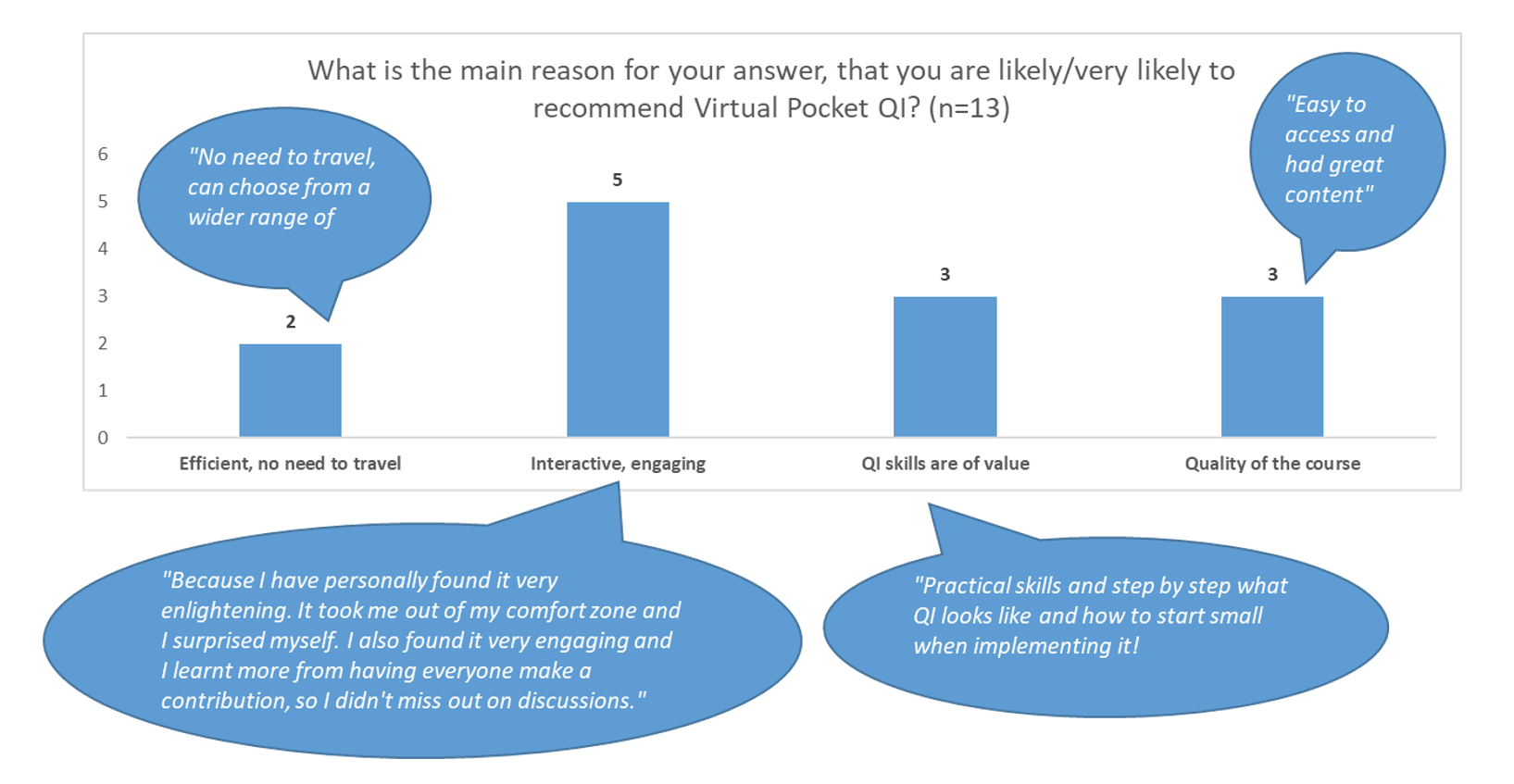

- 13 delegates would recommend the virtual QI webinar, citing reasons (fig. 2)

- Feedback from facilitators (fig 3)

- Established key learnings (fig. 4)

Figure 1: Number of delegates starting and completing session

Act: We can adapt, abandon, adopt – plan for your next test in April

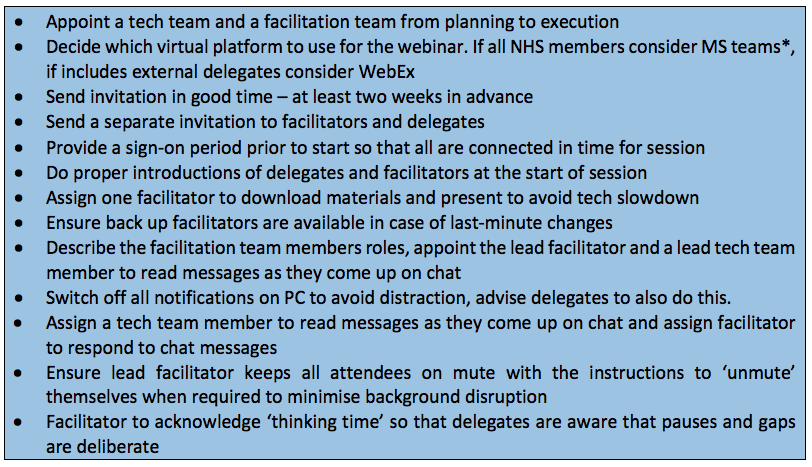

We will adapt, and the key learnings in Figure 4 will inform our next PDSA cycle.

Figure 3. Feedback from the facilitators

Figure 4. Key learning and recommendations:

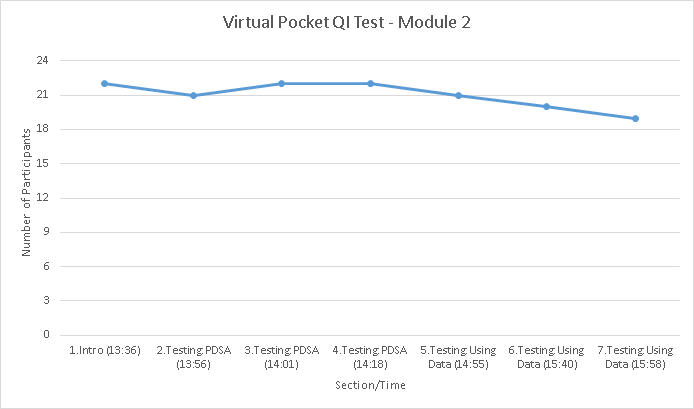

QI Department ‘Virtual Pocket QI – Webinar Test’ Cycle 2

By Shuhayb Mohammad Ramjany – Training & Programme Support Officer/Quality Improvement Coach (Corporate)

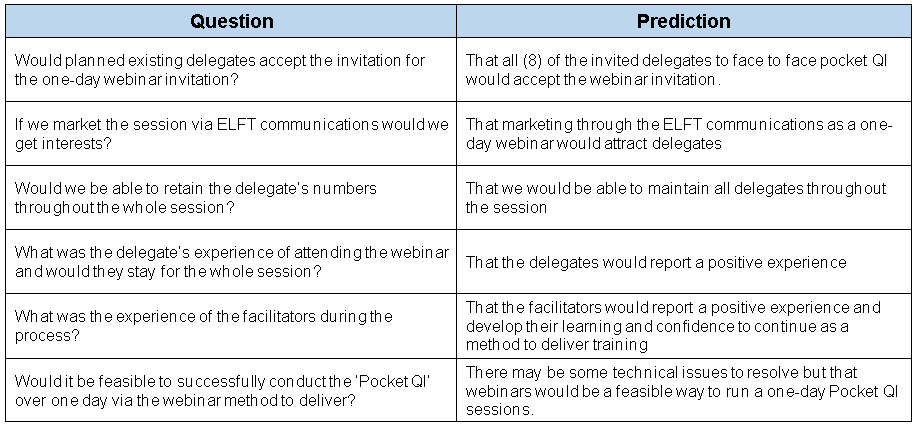

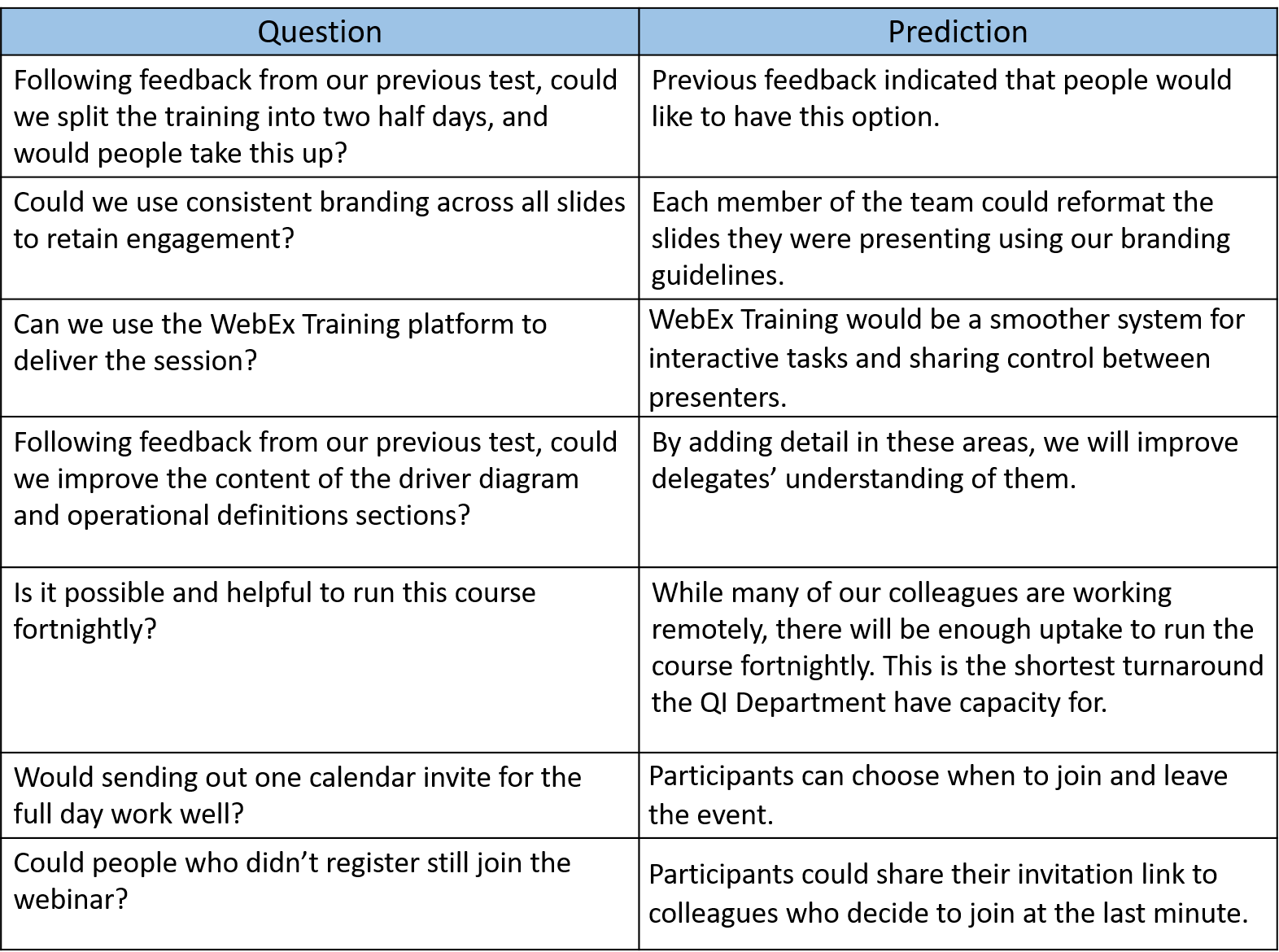

Following on from the first test cycle in March for the ‘Webinar Pocket QI’, some adaptations were made as described in the first PDSA. The theory was that the QI Department would be able to successfully deliver the Pocket QI course as a one-day webinar session to an unlimited number of people. The department wanted to open the course Trust-wide and see who would attend. The expectations for the test are set out below. To start, a series of questions was asked and predictions were made:

Plan: Plan the test, including the plan for collecting data

The aim was to deliver Pocket QI training virtually, as a webinar, over one day. The prediction was that existing delegates would accept the invitation and that, with marketing, more delegates might sign up. Also, hosting the training over one day would be more time-efficient and perhaps enable more regular webinars in the future.

- Who: 8 delegates and those who sign up, six improvement advisors and one technical support team member.

- What: Inform, prepare and invite delegates; advertise the session on the ELFT daily message.

- How: Conduct a practice session and design a detailed agenda. Conduct a one-day (2 sessions) webinar using WebEx as the virtual platform, with modified existing training material. Different facilitators would take turns with sessions.

- When: 21st April for 6 hours (1-hour break and 2 mini breaks)

- Data: Number of delegates accepting the invitation; the number of extra sign-ups through ELFT communications; qualitative feedback from the delegates and the facilitators.

Do: Run the test on a small scale. What happened?

- Conducted a practice session to rehearse the handover between facilitators

- The existing 8 delegates accepted the invitation, an additional 20 delegates signed up and a surprise additional 11 joined who hadn’t signed up – 30 delegates were from ELFT and 9 were externals. A total of 39 joined the session and, at the end of the session, 29 were still online.

- The webinar started as planned, with a 30-minute sign-up time before the start

- Facilitators asked for interaction from delegates throughout the session and, aside from some technical issues, most delegates were very responsive

- Some technical difficulties were experienced but resolved in real time

- One facilitator lost internet connectivity and another facilitator took over in their place

- The session lasted 7.5 hours, inclusive of 30 minutes for setting up and call to order, 3 x 10-minute breaks and a 60-minute lunch.

- Delegates were asked to rate their experience by answering “Would they recommend virtual Pocket QI?”

- Webinar conducted on time and successfully

- Issues with technology inhibited the flow, including difficulties with internet connections, sound not being audible through delegates’ devices, presentations freezing and difficulties with passing presentations over between facilitators.

Study: Analyse the results and compare to the predictions

- 8 delegates accepted, 20 signed up and an extra 11 external delegates attended from a partner collaborator

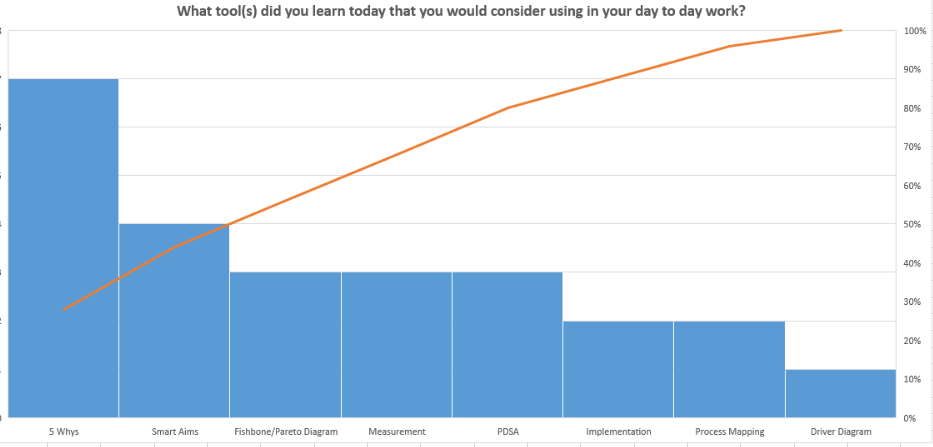

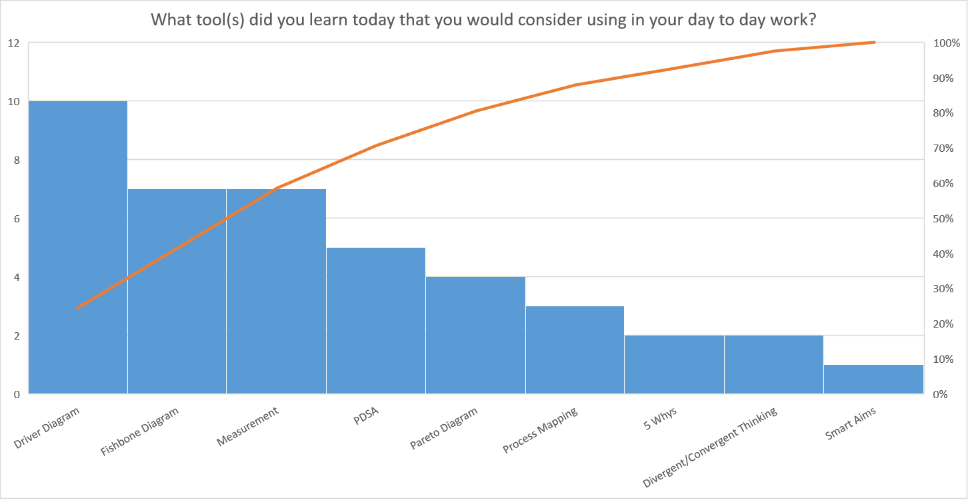

- Delegates were asked via ‘MS Forms’ what tool(s) they would consider using in their day-to-day work (Fig.1)

- Delegates provided some general feedback (Fig.2)

- Delegates were asked, “Would they recommend virtual Pocket QI?” 28 delegates responded: 67.9% said they would ‘very likely’ recommend virtual Pocket QI and 32.1% said they were ‘likely’ to recommend it.

- Some useful feedback was provided by facilitators (Fig.3)

- Key learnings and recommendations were highlighted (Fig.4)
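The attendance and feedback figures reported for this cycle can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the helper `percent` is a hypothetical name, and the response counts of 19 and 9 are an assumption, chosen because they are the split of 28 responses that reproduces the reported 67.9% and 32.1%. The other numbers are taken from the text.

```python
def percent(part, whole):
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)

# Attendance figures reported for Cycle 2
existing, signed_up, unregistered = 8, 20, 11
joined = existing + signed_up + unregistered  # total who joined the session
still_online = 29                             # delegates online at the end

# Assumed response counts (not stated in the text): 19 'very likely', 9 'likely'
respondents = 28
very_likely, likely = 19, 9

retention = percent(still_online, joined)
print(f"Joined: {joined}, still online at the end: {retention}%")
print(f"Very likely to recommend: {percent(very_likely, respondents)}%")
print(f"Likely to recommend: {percent(likely, respondents)}%")
```

Under these assumptions, roughly three in four of those who joined were still online when the session closed.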

Act: The next test cycle will be conducted on 12th May as a full-day webinar, but the test will be adapted to use a different virtual platform, and facilitators will join in a shift system to reduce the time burden on the team.

Figure 1. Pareto chart to demonstrate which tool the delegates would use after the session

Figure 2. Delegate feedback

Figure 3. Facilitators’ feedback

Figure 4. Key Learning and Recommendations

QI Department ‘Virtual Pocket QI – Webinar Test’ Cycle 3

With colleagues and service users spending more time at home during the lockdown, the QI Department decided to offer a ‘Virtual Pocket QI’ webinar every two weeks. People were given the option of staying for the full day or splitting the course across two days, which is how it was previously run in person. The theory was that this would suit those with stamina as well as those who would like time to digest different parts of the course and those who work morning or evening shifts.

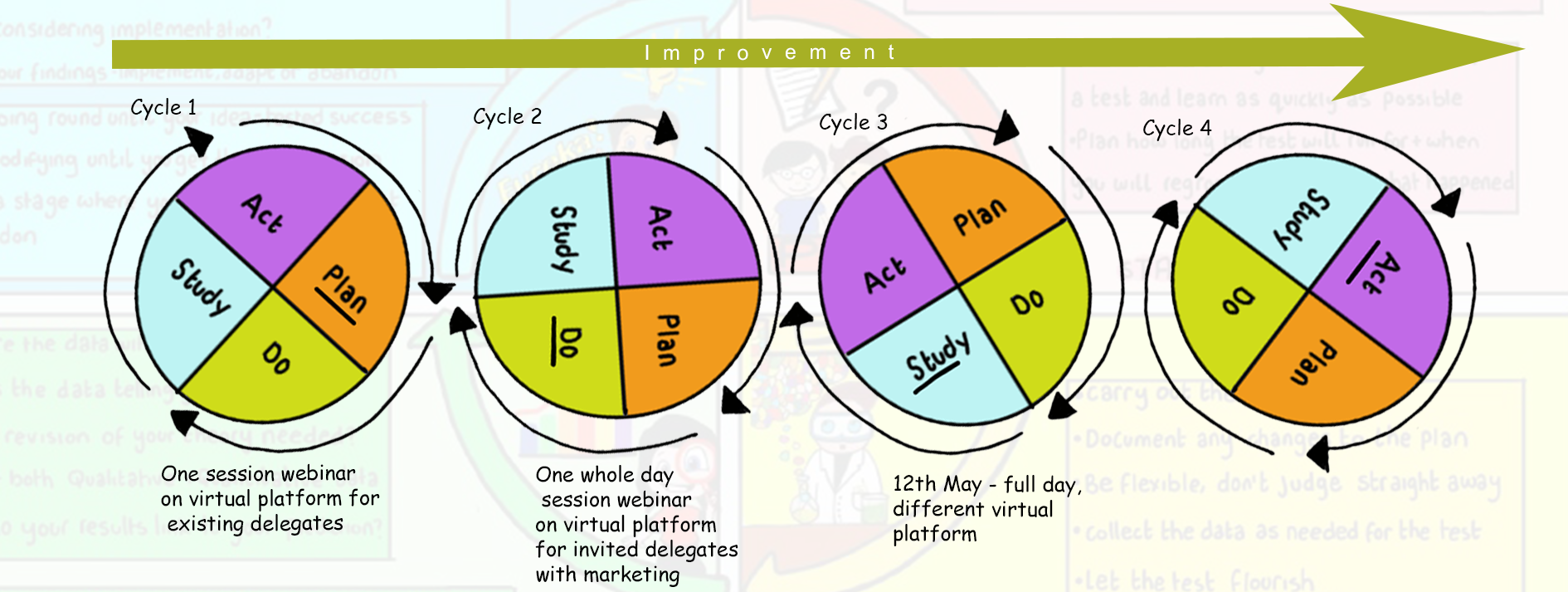

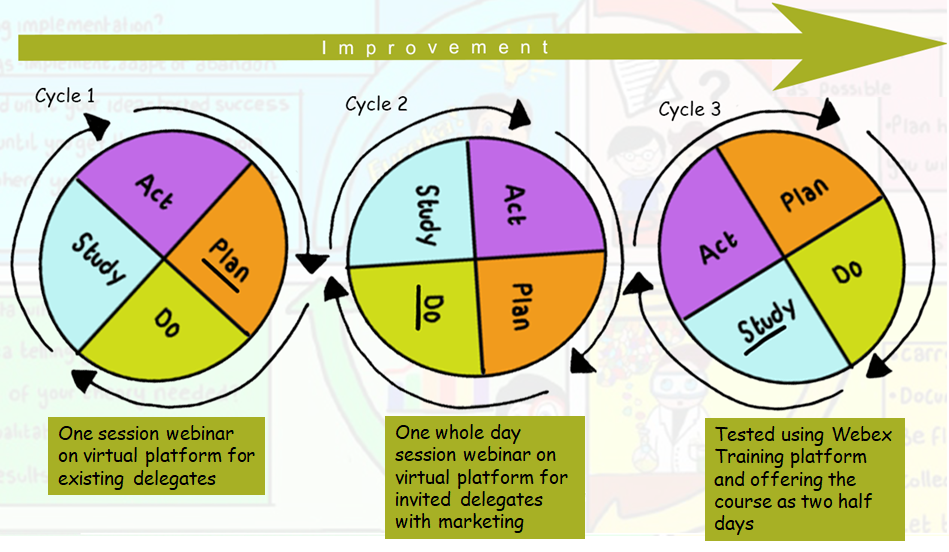

We used the Plan, Do, Study, Act (PDSA) cycle to test the webinar in March and April, and this is our third iteration. This method of building on previous learning is illustrated on a PDSA ramp (Figure 1).

Plan: Plan the test (including the plan for collecting data)

We planned to test the use of the WebEx Training platform within our team and predicted that we could use it for the webinar. We improved the clarity of some of the content following feedback from the previous session. The data collection plan to use Microsoft Forms remained the same.

- Who: The webinar was facilitated by one of the Associate Directors, five Improvement Advisors and one technical support team member.

- What: We advertised the event and how to register, updated the slides and, for each module, assigned facilitators and back-up facilitators with two weeks’ notice.

- How: Facilitators met on the WebEx Training platform to try out the functions. Handing control from one presenter to the next was smooth, but delegates could not write in the chatbox or unmute themselves. We decided the platform was not interactive enough for us to use until we establish whether these restrictions can be overcome.

- When: 12th May, 9:30 am – 1 pm and 2 pm – 4:30 pm.

- Data: Number of delegates accepting the invitation; the number of extra sign-ups through ELFT communications; qualitative feedback from the delegates and the facilitators.

Do: Run the test on a small scale. What happened?

- We used WebEx Meeting Pro to host the session.

- A total of 35 people joined across the two sessions: 26 attended both Part 1 and Part 2, and 9 attended only the morning session.

- We started by asking delegates what they would like to change about their service.

- We hosted the slides from a computer at ELFT headquarters.

- Facilitators joined the webinar for their teaching modules.

- We nominated a member of the team to monitor and respond to the chatbox.

- We added in 5-minute breaks about once an hour.

Study: Analyse the results and compare to the predictions

- There was good communication with participants who were experiencing technical issues.

- All the feedback about the delivery, content and interactivity was very positive.

- Running Part 1 in the morning and Part 2 in the same afternoon is time-efficient, meaning we are able to offer the course every 2 weeks.

- 7 participants attended Part 1 without registering and 7 attended Part 2 without registering.

- The slides were blurry, making them difficult to read. This could have been because of the host’s screen resolution.

- There were pixelated boxes on the screen to hide part of the host’s display, which delegates found distracting.

- Some participants experienced technical issues with the audio of the PDSA video.

- The feedback QR code still showed ‘Day 1’ instead of ‘Part 1’, which could have been confusing.

- The celebration of this event was noticed on Twitter and the QI Department has been invited to present at a QI Community webinar on how to deliver virtual training.

- One of the video links would not play from the host’s computer, so the presenter simply posted the link in the chat and gave delegates time to watch it together.

Act: adapt, adopt or abandon change ideas

- To overcome some of the problems, such as the pixelated boxes showing on the screen, the team will work with people from the WebEx Training platform to establish how it could work for us.

- The background behind the text will be changed to light colours so it is easy to read.

- We will animate the driver diagram slide to make it more engaging.

- The feedback QR code will be changed to ‘Part 1’ and ‘Part 2’.

- Some of the facilitators will be presenting their learning and experience of virtual training at a QI Community webinar.

Figure 1. Rapid Cycle Ramp

Figure 2. Pareto chart demonstrates which tool the delegates would use in their day to day work

Figure 3. Facilitators’ feedback

Figure 4. Delegates’ feedback

Figure 5. Key Learning and Recommendations
